Adilbek Karmanov

I am a Research Engineer at the Metaverse Center, MBZUAI in Abu Dhabi, advised by Prof. Hao Li. My work focuses on 3D generation from limited views, real-time reconstruction, and neural rendering for immersive systems such as VR and large curved LED displays. My research interests are in 3D computer vision and graphics, particularly in unifying human and scene reconstruction under real-time representations (NeRF, 3DGS), and in integrating generative models such as diffusion and flow-matching to enhance fidelity, consistency, and interactivity in 3D synthesis.

I received my M.Sc. in Computer Vision from MBZUAI under the supervision of Prof. Shijian Lu, where my thesis explored test-time adaptation in vision–language models. I earned my B.Sc. in Computer Science from Suleyman Demirel University, with an exchange term at UNIST.

Hallstatt, Austria

Experience

Publications

-

SOAP: Style-Omniscient Animatable Portraits (SIGGRAPH 2025)

SOAP turns a single stylized 2D photo into a fully rigged, high-resolution 3D avatar with consistent geometry, texture, and animation-ready features.

-

DiffPortrait360: Consistent Portrait Diffusion for 360° View Synthesis (CVPR 2025)

DiffPortrait360 generates fully consistent 360° head views from a single portrait, handling humans, stylized characters, and anthropomorphic forms with accessories.

-

VOODOO XP: Expressive One-Shot Head Reenactment for VR Telepresence (SIGGRAPH Asia 2024, Journal Track)

VOODOO XP enables real-time, 3D-aware head reenactment from a single photo and any driver video, delivering expressive, identity-preserving, and view-consistent avatars for immersive VR telepresence.

-

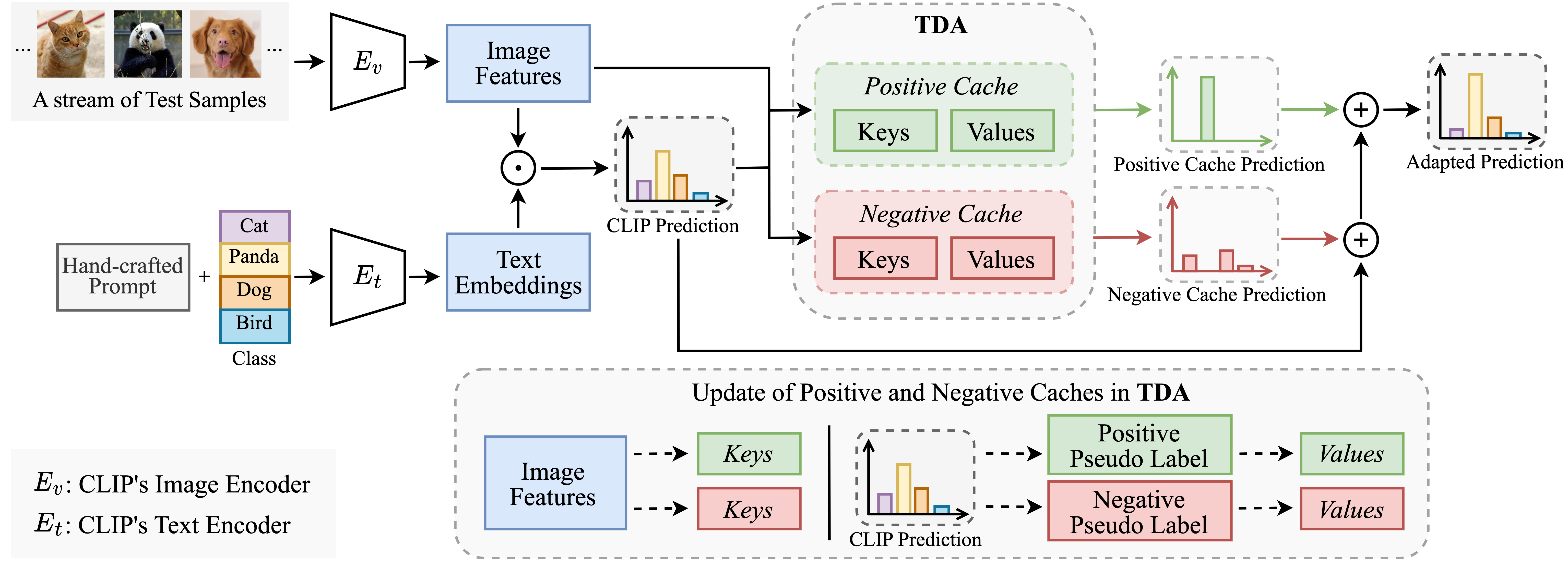

Efficient Test-Time Adaptation of Vision-Language Models (CVPR 2024)

TDA is a training-free dynamic adapter that enables efficient test-time adaptation of vision-language models through progressive cache updates and negative pseudo labeling, achieving state-of-the-art results without backpropagation.

Demos

-

VOODOO VR: One-Shot Neural Avatars for Virtual Reality (SIGGRAPH 2024 Real-Time Live!)

We present a complete solution for real-time immersive face-to-face communication using VR headsets and photorealistic neural head avatars generated instantly from a single photo.